Nvidia GTC 2024 Live Blog: The Future of AI, Self-Driving Cars, and Software-Defined Vehicles

We’re tracking the latest automotive news and trends at Nvidia’s annual tech conference.

MotorTrend is live blogging from Nvidia’s GPU Technology Conference (GTC) 2024. Why? Because Ford, Jaguar Land Rover, Lucid, Mercedes-Benz, Polestar, and Volvo are all here, alongside some of the biggest names in tech. You probably know Nvidia from your personal computer, especially if you’re a gamer, as its GeForce graphics processing units (GPUs) have helped revolutionize the realistic look and feel of video games over the last 25 years. And now, Nvidia and its hardware and software solutions are coming for the future of the car, via artificial intelligence (AI) and autonomous vehicle (AV) technology. Nvidia GTC promises: “Brilliant Minds. Breakthrough Discoveries.” Let’s see if that’s the case.

How Ford Is Rolling Out AI Tools Without the Hype

5:00 p.m. PT - Between the hype and the technical jargon, artificial intelligence often feels like more sizzle than steak. Bryan Goodman, executive director of artificial intelligence at Ford, just gave a meaty talk about the practical uses for generative AI that was refreshingly honest and straightforward.

Goodman pointed out that AI hallucination presents a real problem for automakers. “We can’t afford to have them make stuff up,” he said. To keep its large language models (LLMs) from giving wrong answers, Ford prompts them to admit when they can’t find an answer, the kind of basic logic that should really be baked into every LLM. Where applicable, the models also cite the original sources so users can verify, clarify, or seek out additional detail.
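Ford didn’t show its prompts, but the abstain-and-cite behavior Goodman described can be sketched as a prompt builder. This is a minimal illustration under assumptions: the `Source` type, the `ABSTAIN` wording, and the instruction text are all hypothetical, and the actual call to an LLM is left out. The one design choice worth noting is that when retrieval finds nothing, the code never asks the model at all.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical refusal wording; Ford did not share its actual prompts.
ABSTAIN = "I couldn't find that in the documentation."


@dataclass(frozen=True)
class Source:
    doc_id: str  # hypothetical identifier, e.g. a manual section
    text: str


def build_grounded_prompt(question: str, sources: list[Source]) -> str | None:
    """Build a prompt that limits the model to the supplied sources.

    Returns None when retrieval found nothing, so the caller can reply with
    ABSTAIN directly instead of letting the model guess.
    """
    if not sources:
        return None
    # Number each source so the model can cite it as [1], [2], ...
    numbered = "\n".join(
        f"[{i}] ({s.doc_id}) {s.text}" for i, s in enumerate(sources, start=1)
    )
    return (
        "Answer using ONLY the numbered sources below. "
        "Cite each fact with its source number, e.g. [1]. "
        f'If the sources do not contain the answer, reply exactly: "{ABSTAIN}"\n\n'
        f"Sources:\n{numbered}\n\nQuestion: {question}"
    )


prompt = build_grounded_prompt(
    "How often should I change the oil?",
    [Source("maintenance-schedule", "Placeholder text standing in for a manual excerpt.")],
)
print(prompt)
```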

Here are five practical ways Ford is using generative AI today:

Owner’s manuals: Ford is building a tool that will allow customers to dig up information on their cars by asking a question conversationally, rather than leafing through a 600-page book. Finding the spare-tire jack, looking up the oil capacity, or checking the oil-change interval will soon be as simple as asking a question. Goodman demonstrated the tool using text search, but it’s easy to imagine it working as an in-car voice assistant.

Creating the tool isn’t as simple as uploading PDFs of owner’s manuals to train the LLM. Ford tried that, and the algorithm struggled to interpret many of the tables and figures that contain the key numbers and details. What’s the right way to install a child seat? That depends on the height and weight of your kid. To make sure the model gives accurate, clear, and complete information, Ford also trained it using the source data that’s used to create the manuals. That data is structured in a format the LLM can easily parse.

Call centers: Ford receives more than 15,000 calls to its call centers every day. To understand its customers’ concerns, Ford needs to capture what those calls are about and whether customers are getting the help they’re looking for. Without AI, you’d have to rely on the representatives answering the calls to accurately summarize each interaction and file it properly, and then get other people to summarize the results. With artificial intelligence, each call is recorded, transcribed, and depersonalized before being summarized in a standardized format. From there, the information can be queried or searched. What were the top five call topics of the day? How many hours per topic? Simplifying those thousands of calls into high-quality data allows Ford to spot issues faster and understand whether they’re the result of a product problem, a communication problem, or some other breakdown.
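The back half of the call-center pipeline Goodman described (depersonalize, summarize into a standard record, then query it) can be sketched in a few lines. This is an illustration under stated assumptions, not Ford’s system: the regex patterns, the `CallSummary` fields, and the function names are hypothetical, and the LLM summarization step is assumed to have already produced the records.

```python
from __future__ import annotations

import re
from collections import Counter, defaultdict
from dataclasses import dataclass

# Illustrative patterns only: real depersonalization needs much broader PII coverage
# (names, addresses, account numbers) and is usually done with dedicated tooling.
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")
PHONE = re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b")
VIN = re.compile(r"\b[A-HJ-NPR-Z0-9]{17}\b")  # VINs are 17 chars and never use I, O, or Q


def depersonalize(transcript: str) -> str:
    """Mask email addresses, phone numbers, and VINs before summarization."""
    transcript = EMAIL.sub("[EMAIL]", transcript)
    transcript = PHONE.sub("[PHONE]", transcript)
    return VIN.sub("[VIN]", transcript)


@dataclass
class CallSummary:
    """Hypothetical standardized record; in the described pipeline an LLM fills it in."""
    topic: str
    minutes: float
    resolved: bool


def top_topics(summaries: list[CallSummary], n: int = 5) -> list[tuple[str, int]]:
    """The n most frequent topics as (topic, call_count) pairs."""
    return Counter(s.topic for s in summaries).most_common(n)


def hours_per_topic(summaries: list[CallSummary]) -> dict[str, float]:
    """Total call time per topic, in hours."""
    hours: dict[str, float] = defaultdict(float)
    for s in summaries:
        hours[s.topic] += s.minutes / 60
    return dict(hours)
```

Masking happens before summarization so that personal details never reach the summary records that other people query.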

Chatbots: According to Goodman, people really want to talk to the data. This will happen in a number of ways, though primarily through chatbots. Ford currently has more than 100 chatbots in use around the world. The danger is that if the chatbots don’t talk to each other, the Ford app on your phone could give a different answer than Ford.com. To prevent this, Goodman envisions “unified chatbots” that will be able to communicate with each other and use retrieval-augmented generation (RAG) to provide consistent answers across all of Ford’s platforms.
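The consistency argument comes down to every channel retrieving from the same knowledge base. Here is a minimal sketch of that idea, assuming a hypothetical `KnowledgeBase` class and placeholder passages; naive word-overlap scoring stands in for the vector search a production RAG system would typically use, and a simple template stands in for the LLM’s generation step.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    source: str
    text: str


def tokens(s: str) -> set[str]:
    """Lowercased words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", s.lower()))


class KnowledgeBase:
    """One shared retrieval layer (hypothetical) that every chatbot queries."""

    def __init__(self, passages):
        self.passages = list(passages)

    def retrieve(self, question: str, k: int = 2) -> list[Passage]:
        q = tokens(question)
        scored = [(len(q & tokens(p.text)), p) for p in self.passages]
        # Keep only passages that share at least one word with the question.
        scored = [(score, p) for score, p in scored if score > 0]
        scored.sort(key=lambda sp: sp[0], reverse=True)
        return [p for _, p in scored[:k]]


def answer(kb: KnowledgeBase, question: str) -> str:
    hits = kb.retrieve(question)
    if not hits:
        return "I couldn't find that."
    # Stand-in for generation: a real system would pass `hits` to an LLM.
    return f"{hits[0].text} [{hits[0].source}]"


# Two hypothetical front ends (phone app, website) sharing one retriever.
def app_bot(kb: KnowledgeBase, question: str) -> str:
    return answer(kb, question)


def web_bot(kb: KnowledgeBase, question: str) -> str:
    return answer(kb, question)
```

Because both bots call the same `answer` over the same `KnowledgeBase`, updating one passage changes the answer everywhere at once.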

Technician assistant: Similar to the owner’s manual tool, technicians will soon be able to ask a question in natural language and get answers pulled from a library of service manuals, diagnostic guides, and technical bulletins. One cool feature of this tool: Most shop manuals show you how to take something apart, but when you get to the end they simply instruct you to reverse the steps for reassembly. Ford’s technician assistant actually spells out the reassembly steps in the correct order. Simple but effective.
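Ford didn’t describe how its assistant generates reassembly steps, so the following is only one plausible sketch: walk the disassembly list backward and swap each leading verb for its inverse. The `INVERSE` verb table and the function name are hypothetical. Real reassembly often adds information a reversal can’t supply (torque specs, new gaskets), so unknown verbs are flagged rather than guessed.

```python
# Hypothetical verb pairs; a real service library would store reassembly data explicitly.
INVERSE = {"remove": "install", "disconnect": "connect", "loosen": "tighten", "unclip": "clip"}


def reassembly_steps(disassembly: list) -> list:
    """Turn an ordered disassembly procedure into explicit reassembly steps.

    Walks the steps backward and swaps each leading verb for its inverse, so
    the technician gets a spelled-out list instead of "reverse the procedure".
    """
    steps = []
    for step in reversed(disassembly):
        verb, _, rest = step.partition(" ")
        inverse = INVERSE.get(verb.lower())
        if inverse is None:
            steps.append(f"Reverse: {step}")  # no known inverse; flag for a human
        else:
            steps.append(f"{inverse.capitalize()} {rest}")
    return steps


print(reassembly_steps(["Disconnect battery", "Remove cover", "Loosen bolt"]))
```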

Testing and development: Goodman also hopes AI will help Ford accelerate development, in both evaluation and testing. Engineering cycles will quicken with the help of both human and AI feedback in the loop. He didn’t say so, but we’d bet this is in response to the Chinese automotive industry’s shocking ability to quickly develop new products. Goodman would also like Ford to use AI to come up with better methods to systematically measure performance over time. Time, as they say, will tell. –Jonny Lieberman and Eric Tingwall

Q+A with Nvidia Founder and CEO Jensen Huang: Tensions with China, AI Smarter Than Humans, and When Americans Will Get the Good Stuff

2:30 p.m. PT - While Nvidia’s GPU, cloud, AI, and software solutions have been adopted by many of the world’s leading car companies (and more recently tons of Chinese EV makers), the automotive industry is only a small part of Nvidia’s business. So it came as no surprise that no car questions were asked or answered during the 90-minute session with Nvidia founder and CEO Jensen Huang at GTC 2024.

Huang’s introduction was essentially a recap of his keynote address from the previous day, which reinforced Nvidia’s focus on AI and introduced Blackwell, the company’s latest GPU, which can be configured into an AI supercomputer. Beyond the tech-industry-focused questions, there were two questions of broader interest.

One journalist asked about China, the geopolitical tensions with America, the competition between the countries across the tech industry, and how this affects Nvidia’s path forward. Huang was very clear in his answer, saying Nvidia needs to first “make sure we understand the policies and that we’re compliant.” Then, “do everything we can to create more resilience in our supply chain,” said Huang. He went on to say that he is generally positive about how things will play out in China and that “the world is not adversarial.”

Another journalist asked Huang about the potential threat of artificial general intelligence (AGI) and whether he saw himself as the celebrated artist Leonardo da Vinci or as J. Robert Oppenheimer, the father of the atomic bomb. Huang paused and noticeably stiffened at the latter comparison before pointing at his Blackwell GPUs on display and saying, “These are not bombs.”

Quick context: AGI is generally defined as a type of AI that is equal to or better than human intelligence across a wide range of areas, and some believe that it could eventually be a threat to humanity. Huang, as the leader of a company that supports the rapid pace of AI development via its hugely fast and powerful GPUs and software, is often asked about the timeline for AGI to surpass humans.

Huang stressed the importance of specificity when asking questions about AGI. If the concept is that AGI will be able to perform very well (better than 80 percent of the human population) on specific tests in math, reading, comprehension, etc., such as the SAT for high school or GMAT for grad school, then, yes, he thinks this level of AGI is inevitable, and within the next 10 years. “We’re already two years into that 10 years …” said Huang. Interesting, but yes, we know you’re here for cars. If we had our way, we would have asked Huang the following:

"Jensen, during your keynote address at GTC 2024, you announced that BYD will be adopting Nvidia’s latest Drive Thor, Isaac robotics, and Omniverse platforms. This is alongside other partnerships that have already been announced between Nvidia and Chinese carmakers, including Xpeng, Li Auto, Great Wall Motors, and more. None of these companies sell cars in the US.

When will American drivers and consumers be able to experience all of Nvidia’s latest and greatest technology? And in what form: cars, trucks, ride-hailing services, robotaxis, autonomous delivery trucks? What is the holdup with American car brands and the American market?"

We sent this query to Nvidia PR and will update this post if they respond. –Ed Loh

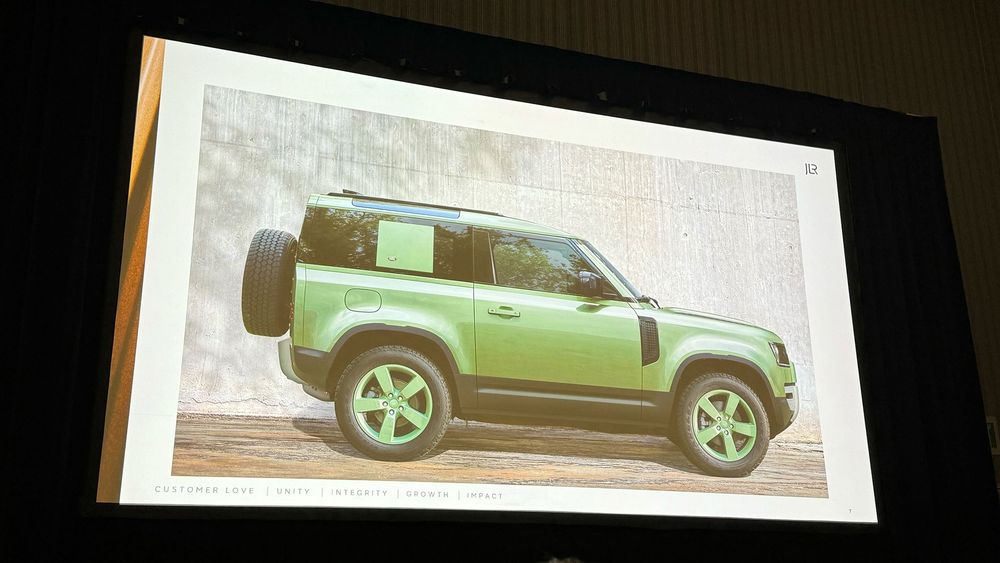

How JLR Is Using Generative AI to Transform the Luxury Experience

1:30 p.m. PT - We attended a lecture by Chrissie Kemp, the chief data and AI officer at JLR (Jaguar Land Rover), about how the rebranded British luxury brand consortium is using the latest advances in artificial intelligence to make its business better. If you missed it, Land Rover is no longer a thing; instead, JLR consists of Range Rover (mission statement: "We lead by example."), Defender ("We embrace the impossible"), Discovery ("We never stop discovering"), and Jaguar ("We inspire self-expression"). AI, and specifically generative AI, is transforming the way JLR does things, from design to onboard vehicle technology, and from manufacturing to even sales. JLR and Kemp are at GTC because JLR will use Nvidia’s Drive platforms (Orin, Hyperion, and the upcoming Thor) to “deliver next-generation automated driving systems plus AI-enabled services and experiences for its customers.”

What does this mean for a luxury customer? Well, imagine that your Range Rover knows you really like independent coffee shops. The vehicle could automatically start suggesting and routing you to a café, or even go ahead and order and pay for your latte. The Range Rover could simply suggest it or you could prompt the vehicle by saying, “Hey, in about 30 minutes I need some caffeine and a bathroom break.” You could ask the vehicle to find you a good coffee shop near a charger. Going further, let’s say you get a phone call or an email and suddenly you need to fly somewhere. The vehicle can access your calendar, credit card, and frequent flyer information and book a ticket for you. Maybe even send a note to your spouse.

Autonomy is coming, although Kemp couldn’t say exactly when; that said, the eventual adoption of Nvidia’s Drive Thor chip makes the wait a software-related rather than a hardware-related issue. JLR will create digital twins of its vehicles that are stored in the cloud and analyzed with AI. Based on data the owners generate via driving, the company will offer “proactive predictive maintenance.” We assume that covers battery and motor health as well as systems like HVAC and the windows, although Kemp offered no specifics. When a vehicle is being repaired and a technician gets stumped, an AI chatbot can automatically appear on their tablet or laptop and help them diagnose the issue. Speaking of chatbots, they will be employed on the various brands’ websites to help potential customers configure the cars and imagine them in their lives. For example, a customer could ask the chatbot to show the new Defender with their kids and dog in the back.

The protection of customer data seemed to be front of mind for Kemp. She spoke about how the entire organization was angry with her when she paused the rollout of JLR’s AI until an ethical framework was in place. This is, of course, a major dilemma facing several industries: How do you secure the massive amounts of data that will be generated not only by software-defined vehicles (SDVs) but also by generative AI? It’s too large a topic to even scratch the surface of in this entry. At least, seemingly thanks to Kemp, JLR is trying to get a handle on the problem. Now, can AI help JLR figure out what to do with the Jaguar brand? Only time will tell. –Jonny Lieberman

What’s Next in Generative AI: Key Takeaways on ChatGPT for Business from OpenAI’s COO

1:25 p.m. PT - One of the largest sessions at Nvidia GTC 2024 was a one-on-one conversation between OpenAI chief operating officer Brad Lightcap and Nvidia vice president of enterprise computing Manuvir Das. No surprise, given that GTC, and Nvidia’s future, are all about the new reality of generative artificial intelligence (gen AI), as pioneered by OpenAI and its revolutionary ChatGPT chatbot. How revolutionary? According to Lightcap, “90 percent of Fortune 500 companies are using ChatGPT in some form.” If that got your attention, here are four more takeaways from Lightcap and Das:

It’s very early. That's especially true for gen AI as a business (enterprise) tool. ChatGPT rocked the world with its debut in November of 2022. Per Lightcap: “We are still on the flat part of the curve—really early in the phase shift. Companies are still rigging and pipelining the infrastructure.”

On target setting: “Don’t overshoot; don’t try to swallow the ocean. But also don’t undershoot either. Don’t lack ambition,” said Lightcap, who went on to recommend companies start by constraining whatever problem they have, as specifically as possible. Only then should they apply AI and examine the value in the results.

Democratize access: Lightcap also recommends that company leaders provide broad access to AI tools, since workers know the problems and can find solutions quickly and organically. He recommends this over spending time and energy delivering a perfectly finished chatbot or other AI tool.

Start with the gnarliest problem: Lightcap gave an example of how customer service is a good area in which to apply AI, as it’s a problem across many industries. “No [company] likes the quality [of their customer service]. They spend a lot of money on it. And it doesn’t fix the problem,” said Lightcap.

Specific car brands and automotive as an industry were not mentioned during this conversation, but given that more than a handful of car companies occupy the Fortune Global 500 list (including Toyota, Volkswagen, Stellantis, and others), it’s not hard to imagine how and where Lightcap’s advice on gen AI could be applied. –Ed Loh

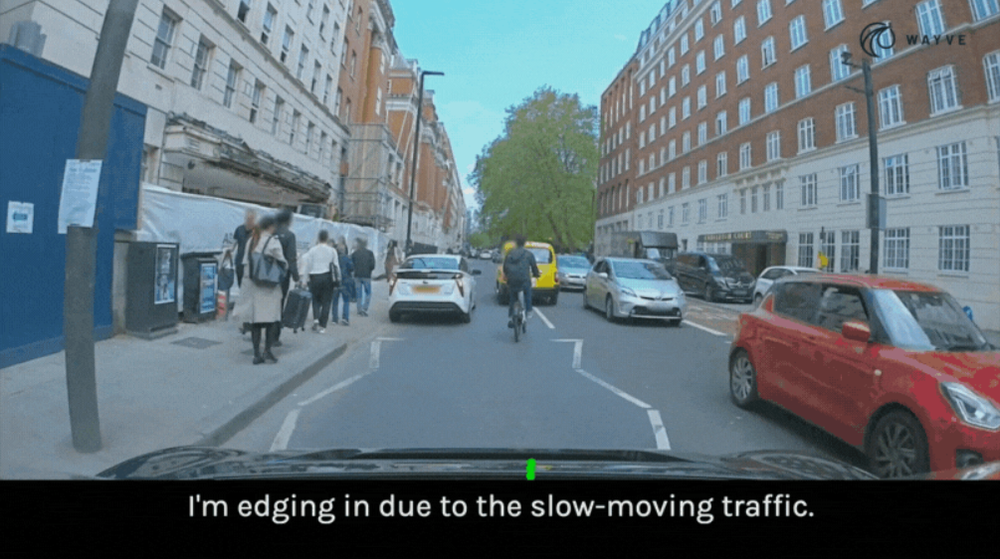

Would You Trust a Self-Driving Car More If It Told You What It Was Thinking?

12:55 p.m. PT - England-based autonomy startup Wayve is building an AI driving system that intuits the rules of the road from real-world experience rather than relying on hard-coded dos and don’ts. Whether or not that approach pays off, Wayve has another clever, AI-powered idea that could help today’s drivers become relaxed passengers in self-driving cars.

Wayve’s LINGO-1 is a driving commentator that explains why the autonomous system is slowing, accelerating, or turning. It can inform passengers, “I’m stopping for the pedestrian at the crosswalk” or “I’m slowing down for the upcoming left turn.” The program combines images and driving data with the reasoning of a large language model in what Wayve calls a vision-language-action model (VLAM).

Wayve has also developed a similar text-based query system, where users can ask the AI driver to explain its actions and what it’s seeing in greater detail. For example, a passenger could have the system identify the top three hazards it’s tracking or explain why it’s stopped even though the road appears clear. Providing riders with this kind of transparency and explanation could help the autonomous-driving industry as it deals with broad public distrust. –Eric Tingwall
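Wayve hasn’t said how its system ranks hazards, so here is a purely hypothetical sketch of how a “top three hazards” answer could be produced: score each tracked object by inverse time-to-collision (closing speed divided by distance) and keep the three highest. The `Hazard` fields and the scoring rule are assumptions chosen for illustration.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass


@dataclass
class Hazard:
    label: str
    distance_m: float
    closing_speed_mps: float  # positive when the gap to the object is shrinking

    def risk(self) -> float:
        """Hypothetical urgency score: inverse time-to-collision, in 1/s."""
        if self.closing_speed_mps <= 0:
            return 0.0  # not closing in, so not on a collision course
        return self.closing_speed_mps / max(self.distance_m, 1e-3)


def top_hazards(tracked: list[Hazard], n: int = 3) -> list[str]:
    """Labels of the n most urgent hazards, most urgent first."""
    return [h.label for h in heapq.nlargest(n, tracked, key=lambda h: h.risk())]


tracked = [
    Hazard("cyclist", 10, 5),
    Hazard("parked car", 5, 0),
    Hazard("pedestrian", 20, 2),
    Hazard("truck", 40, 10),
    Hazard("dog", 8, 1),
]
print(top_hazards(tracked))
```

Note that the parked car, though closest, scores zero because the gap isn’t shrinking. That is the kind of reasoning a commentary feature would need to put into words.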

3 Ways the 2024 Volvo EX90 Previews the Future of the Car

10:05 a.m. PT - I had a conversation with Alwin Bakkenes, Volvo’s head of software, about how the upcoming EX90 electric SUV differs from Volvos on the road today. His answers highlight what makes a software-defined vehicle unlike today’s vehicles that run software but aren’t designed around it.

Central compute architecture: Most cars today rely on an electronic architecture built around domain controllers, where core functions (aka domains) such as the powertrain, the chassis systems, and the driver assistance features all run on their own computers. The EX90 is designed around a central compute or zonal architecture using Nvidia Orin and Xavier computers to manage all systems with one exception (more on that in the next paragraph). This centralized architecture significantly reduces wiring, allows for greater interconnectedness between systems, and makes it easier to update any aspect of the vehicle in the future.

Infotainment as a product: The EX90’s infotainment system runs on a Qualcomm Snapdragon computer independent of the Nvidia central compute architecture. Why? Volvo has developed its latest infotainment system as a standalone product, which allows it to be used across the lineup regardless of what the rest of the vehicle’s computing architecture looks like. This should make it easier for software engineers to manage the workload. Rather than develop five different versions of an infotainment system for five different vehicles, they’ll use this single hardware and software “superset” in all vehicles for the foreseeable future. That should reduce the number of infotainment security patches and product improvements they’ll have to juggle.

You’re part of the development team: The EX90 will go on sale in the middle of 2024 with all the hardware necessary for Volvo’s Ride Pilot eyes-off-the-road highway driving assistant, but the feature won’t be available at launch. Volvo plans to enable it as a subscription service for California drivers at a later date. Engineers need to accumulate more test data before that can happen and they’re counting on customers to help. As early EX90 owners manually drive their cars, the lidar, radars, and cameras will collect data and send it to Volvo to validate Ride Pilot before it’s released. –Eric Tingwall

Generative AI Is Transforming Car Design

9:44 a.m. PT - We attended a demonstration of how generative AI can be used to design vehicles. The interesting takeaway is that the new generation of design tools can learn a designer’s personal style, so even a simple line drawing can be rendered in that designer’s style. The AI can also be tailored to different brands or branded design languages. That means a seemingly simple sketch (what we saw demo’d was barely above a child’s doodle, pictured above) can be rendered in the personal style of designer X, after the AI has been fed a few reference images. And because you can make the training images whatever you like, the same sketch can come out looking like a Porsche. Change the images, and the final product can look like a Lamborghini.

Designers will also be able to rapidly iterate specific parts of a car. We were shown an image of an original Ford Bronco (which the AI folks kept humorously referring to as a “Jeep”), and with a few mouse clicks, individual pieces could be swapped out. Think grille, wheels, running boards, headlights, those sorts of parts. If you think about vehicles like the current Bronco, Ford F-150, or Toyota Land Cruiser, where the body panels stay the same but the grilles change based on trim level, you start to see how and why AI can be such a powerful tool for designers. Using AI to create guided refinements (a.k.a. rapid prototypes) is a game-changing tool.

Another area where AI has the ability to improve car design is with rendering. It’s one thing to create an image of a vehicle. It’s another to render said vehicle in a lifelike, 3D environment. We saw a scenario where a designer created a sinister-looking, Bond-villain-lair-style garage, although it was only a 2D image. That moody garage was fed into the AI program, which was instantly able to turn it into an accurately lit 3D space, complete with a parked vehicle. Powerful, impressive stuff that will not only help existing designers, but should also empower a new generation for whom creating vehicles is a passion. –Jonny Lieberman

What Car Nerds Need to Know to Understand Nvidia GTC 2024

9:24 a.m. PT - If you love cars because of all the beautiful, brilliant complexity under the hood, you can no longer ignore software and computers. Soon you won’t be able to geek out on automotive technology without a basic understanding of computing fundamentals. Case in point, you’ll have a hard time following what we’re writing about from Nvidia’s GTC 2024 without understanding a few key ideas and products. Here’s a primer on the terms you’re going to see over and over in our coverage.

What’s a software-defined vehicle? EVs and autonomous vehicles make headlines, but neither would be possible without the larger software-defined-vehicle revolution that’s underway. A software-defined vehicle (SDV) is any vehicle in which interactions and key features are heavily influenced by the software. With an SDV, you might use your cell phone as a key, temporarily download more horsepower for a track day, or call on a remote technician to diagnose that weird vibration between 62 and 64 mph. The 2012 Tesla Model S is widely regarded as the first software-defined vehicle, and Tesla remains the company to emulate and beat for automakers ramping up their SDV efforts.

What is Nvidia Drive Thor? Drive Thor is the latest version of Nvidia’s central car computer, designed to manage autonomous driving, driver-assistance features, and in-vehicle infotainment, and it’s being adopted by both automakers and self-driving-car companies. This system-on-a-chip (SoC) combines CPUs and GPUs and is rated at 1,000 TOPS (trillions of operations per second, a figure often loosely called teraflops), which gives it about four times the performance of its predecessor, Drive Orin. Think of TOPS as the horsepower rating for a computer: It represents how many operations a chip can execute in a second. Tera means trillion, so a 1,000-TOPS car computer has roughly 80 times the raw throughput quoted for a Microsoft Xbox Series X, though the console’s rating counts higher-precision floating-point operations (FLOPS), so the comparison is a loose one.
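The four-times and 80-times comparisons are simple ratios. The sketch below reproduces them, assuming Nvidia’s widely quoted 254 TOPS rating for Drive Orin and Microsoft’s 12.15 TFLOPS (FP32) figure for the Xbox Series X; neither number appears elsewhere in this article. Treat the Xbox ratio loosely: Thor’s 1,000 rating counts low-precision (8-bit) operations, while the console’s figure counts 32-bit floating-point operations.

```python
THOR_TOPS = 1000              # Drive Thor rating quoted in this article
ORIN_TOPS = 254               # assumption: Nvidia's commonly quoted Drive Orin figure
XBOX_SERIES_X_TFLOPS = 12.15  # assumption: Microsoft's FP32 figure for the console


def speedup(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


print(f"Thor vs. Orin: {speedup(THOR_TOPS, ORIN_TOPS):.1f}x")                      # 3.9x
print(f"Thor vs. Xbox Series X: {speedup(THOR_TOPS, XBOX_SERIES_X_TFLOPS):.0f}x")  # 82x
```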

What’s a CPU? A central processing unit is the brain of a computer. It collects instructions and sequences them before issuing instructions to the other components a computer relies on so that operations occur in the correct order. It is designed for minimal delay in processing and executing a large number of commands sequentially.

What’s a GPU? Graphics processing units were originally developed to improve video and image quality, but today they’re also instrumental for complex work such as training and running artificial intelligence algorithms. While CPUs are optimized for carrying out instructions one at a time, GPUs are better suited to run multiple commands simultaneously.
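The CPU-versus-GPU distinction is really about dependencies between steps. The sketch below runs entirely on a CPU in plain Python, so it illustrates only the structure of the work, not GPU execution; the function names and pixel values are hypothetical. Brightening an image is data-parallel: each output pixel depends only on its own input, so the work can be done in any order (or, on a GPU, all at once). A running total is sequential: each step needs the previous result.

```python
import random


def brighten(pixels: list, amount: int) -> list:
    """Data-parallel: each output depends only on its own input pixel,
    so the work could be split across thousands of GPU threads."""
    return [min(p + amount, 255) for p in pixels]


def brighten_any_order(pixels: list, amount: int, seed: int = 0) -> list:
    """Same result computed in a shuffled order, showing no step waits on another."""
    out = [0] * len(pixels)
    order = list(range(len(pixels)))
    random.Random(seed).shuffle(order)
    for i in order:
        out[i] = min(pixels[i] + amount, 255)
    return out


def running_total(values: list) -> list:
    """Sequential: each output needs the previous one, the kind of chain a
    CPU core works through in order. (Parallel prefix-sum algorithms exist,
    but they restructure the computation rather than simply splitting it.)"""
    totals, acc = [], 0
    for v in values:
        acc += v
        totals.append(acc)
    return totals


pixels = [0, 100, 250, 30]
print(brighten(pixels, 10), running_total(pixels))
```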

What is Nvidia Omniverse? Nvidia’s 3D graphics software is used by automakers in a wide variety of ways, from powering online car configurators to simulating the operation of entire assembly plants. You can think of Omniverse as Nvidia’s platform for building the metaverse, but instead of creating a virtual escape from reality filled with awkward, legless avatars, automakers are using it to create digital twins that help them represent their vehicles and understand how things work in the real world.

What’s a digital twin? A digital twin is a virtual model of something that exists in the physical world. It often involves an exacting level of detail to represent complex, dynamic environments, such as an auto factory. Our deep dive on digital twins reveals more about how automakers like BMW are using the technology today. –Eric Tingwall

How AI Supercomputing Power Is Coming to Your Car

Nvidia Founder/CEO Jensen Huang Announces Blackwell GPU and New Drive Thor Partnerships with BYD.

9:00 a.m. PT - Nvidia Corporation has been hosting GTC (a.k.a. the GPU Technology Conference) for the last 15 years, but it’s been five years since this event was held live. Graphics processing units (GPUs) are key to delivering realistic lighting and visual effects in video games and are the core technology behind Nvidia’s success over the last 30 years. Founded in a corner booth of a Denny’s restaurant in the heart of Silicon Valley in 1993, Nvidia is currently valued at over two trillion dollars, joining only a handful of companies that have achieved a market capitalization over a trillion dollars.

For GTC 2024, Nvidia is focused on artificial intelligence (AI) across three areas: automotive, robotics, and a 3D graphics platform Nvidia calls the “Omniverse,” which co-founder and CEO Jensen Huang said generated all the dazzling video simulations shown in his two-hour-long keynote address.

Huang kicked off his presentation with a brief history lesson on Nvidia’s role in AI as we know it today, telling the thousands gathered inside San Jose’s SAP Center how, back in 2016, he hand-delivered Nvidia’s latest, greatest GPU system at the time, a DGX-1 AI supercomputer, to a startup known as OpenAI. In 2022, OpenAI would unleash ChatGPT onto the world, a launch that, per Huang, “captured the world’s imagination” and helped people realize the importance and capabilities of artificial intelligence.

Huang used this setup to debut Nvidia’s new, massively powerful Blackwell GPU, which is not only the largest GPU ever developed but can also be configured into an AI supercomputer (with other Nvidia components, notably its Grace CPU and its rack and thermal management systems) to support the training and development of generative AI tools and systems by Nvidia and its customers. In a press release after Huang’s speech, Nvidia outlined the companies and top executives endorsing Blackwell, including Alphabet and Google CEO Sundar Pichai, Amazon CEO Andy Jassy, Dell CEO Michael Dell, Meta CEO Mark Zuckerberg, Microsoft CEO Satya Nadella, OpenAI CEO Sam Altman, Oracle Chairman Larry Ellison, and Tesla/xAI CEO Elon Musk.

The only major automotive announcement came near the end of Huang’s presentation and was brief: BYD, the world’s largest EV maker, has selected Nvidia’s Drive Thor in-vehicle computing platform for its next-generation vehicles. A post-event press release outlined how BYD would use Drive Thor for generative AI applications, including future digital cockpit, advanced driver-assistance systems (ADAS), and autonomous driving features.

BYD also intends to leverage Nvidia technology from “car to cloud,” including using Nvidia’s Isaac robotics and Omniverse platforms to build “digital twins” at every stage of vehicle manufacturing, from the design of the factories all the way through to the retail experience and after-sales support.

BYD wasn’t the only Chinese OEM to announce it was joining forces with Nvidia at GTC 2024. Xpeng announced that Drive Thor will power its XNGP AI-assisted driving system in future vehicles, while Hyper, a luxury brand of GAC AION, announced it will use Drive Thor starting in 2025 to deliver Level 4 autonomous driving capability. These three Chinese brands join Li Auto and Zeekr, which had previously announced plans to implement Drive Thor in future vehicles.

So, what does this all mean for you, the American car consumer? For now, not much. While Nvidia’s supercomputing power will soon be in the car, and involved in all stages of planning, design, manufacturing, sales, and support, the company’s slickest AI-powered hardware and software will hit the streets of Beijing and Shanghai before coming to Detroit or L.A. –Ed Loh

I used to go kick tires with my dad at local car dealerships. I was the kid quizzing the sales guys on horsepower and 0-60 times, while Dad wandered around undisturbed. When the salesmen finally cornered him, I'd grab as much of the glossy product literature as I could carry. One that still stands out to this day: the beautiful booklet on the Mitsubishi Eclipse GSX that favorably compared it to the Porsches of the era. I would pore over the prose, pictures, specs, trim levels, even the fine print, never once thinking that I might someday be responsible for the asterisked figures "*as tested by Motor Trend magazine." My parents, immigrants from Hong Kong, worked their way from St. Louis, Missouri (where I was born), to sunny Camarillo, California, in the early 1970s. Along the way, Dad managed to get us into some interesting, iconic family vehicles, including a 1973 Super Beetle (first year of the curved windshield!), 1976 Volvo 240, the 1977 Chevrolet Caprice Classic station wagon, and 1984 VW Vanagon. Dad instilled a love of sports cars and fast sedans as well. I remember sitting on the package shelf of his 1981 Mazda RX-7, listening to him explain to my mom, for the Nth time, what made the rotary engine so special. I remember bracing myself for the laggy whoosh of his turbo diesel Mercedes-Benz 300D, and later, his '87 Porsche Turbo. We were a Toyota family in my coming-of-age years. At 15 years and 6 months, I scored 100 percent on my driver's license test, behind the wheel of Mom's 1991 Toyota Previa. As a reward, I was handed the keys to my brother's 1986 Celica GT-S. Six months and three speeding tickets later, I was booted off the family insurance policy and into a 1983 Toyota 4x4 (Hilux, baby). It took me through the rest of college and most of my time at USC, where I worked for the Daily Trojan newspaper and graduated with a biology degree and business minor. Cars took a back seat during my stint as a science teacher for Teach for America.
I considered a third year of teaching high school science, coaching volleyball, and helping out with the newspaper and yearbook, but after two years of telling teenagers to follow their dreams when I wasn't following mine, I decided to pursue a career in freelance photography. After starving for six months, I was picked up by a tiny tuning magazine in Orange County that was covering "The Fast and the Furious" subculture years before it went mainstream. I went from photographer-for-hire to editor-in-chief in three years, and rewarded myself with a clapped-out 1989 Nissan 240SX. I subsequently picked up a 1985 Toyota Land Cruiser (FJ60) to haul parts and camera gear. Both vehicles took me to a more mainstream car magazine, where I first sipped from the firehose of press cars. Soon after, the Land Cruiser was abandoned. After a short stint there, I became editor-in-chief of the now-defunct Sport Compact Car just after turning 30. My editorial director at the time was some long-haired dude with a funny accent named Angus MacKenzie. After 18 months learning from the best, Angus asked me to join Motor Trend as senior editor. That was in 2007, and I've loved every second ever since.
